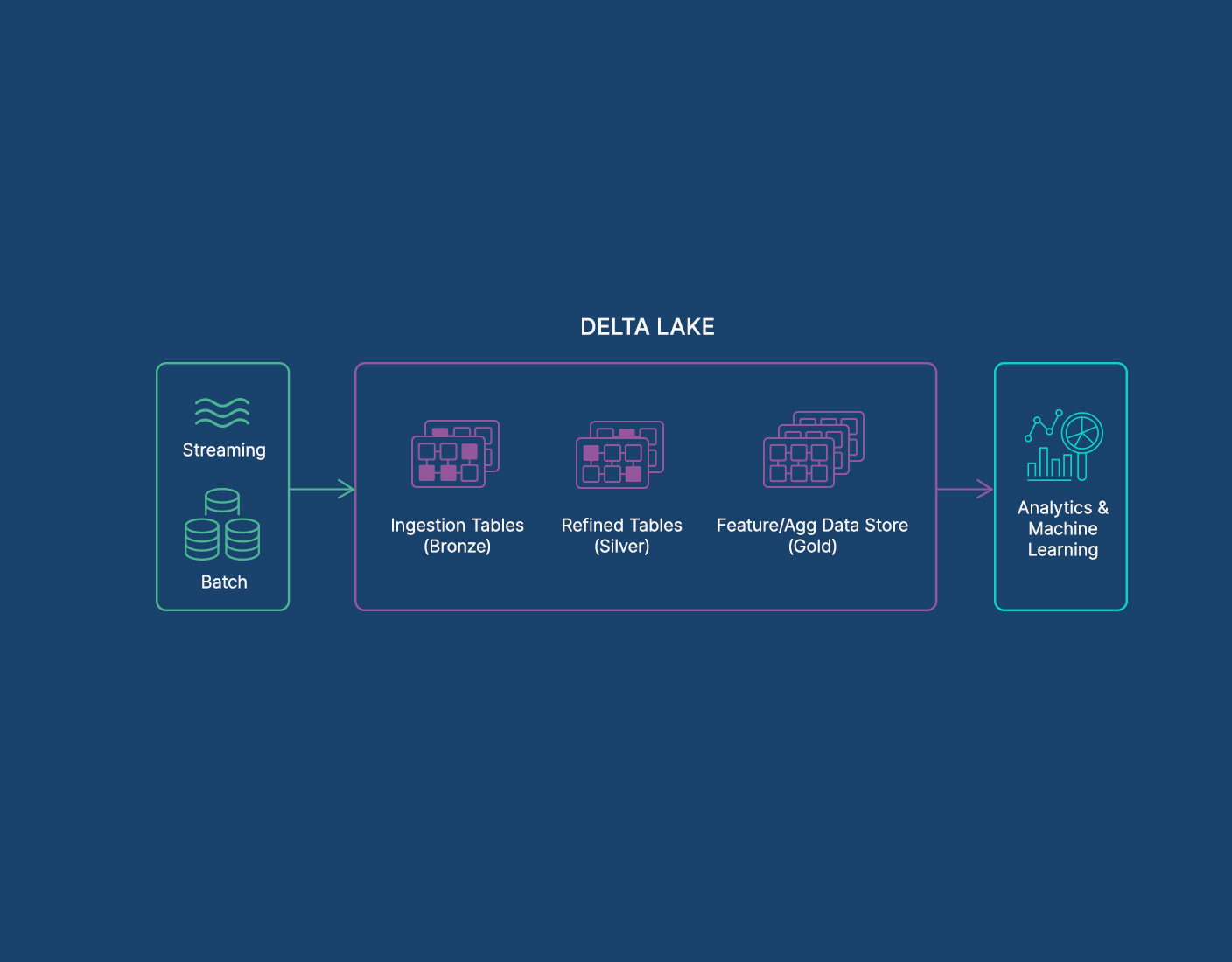

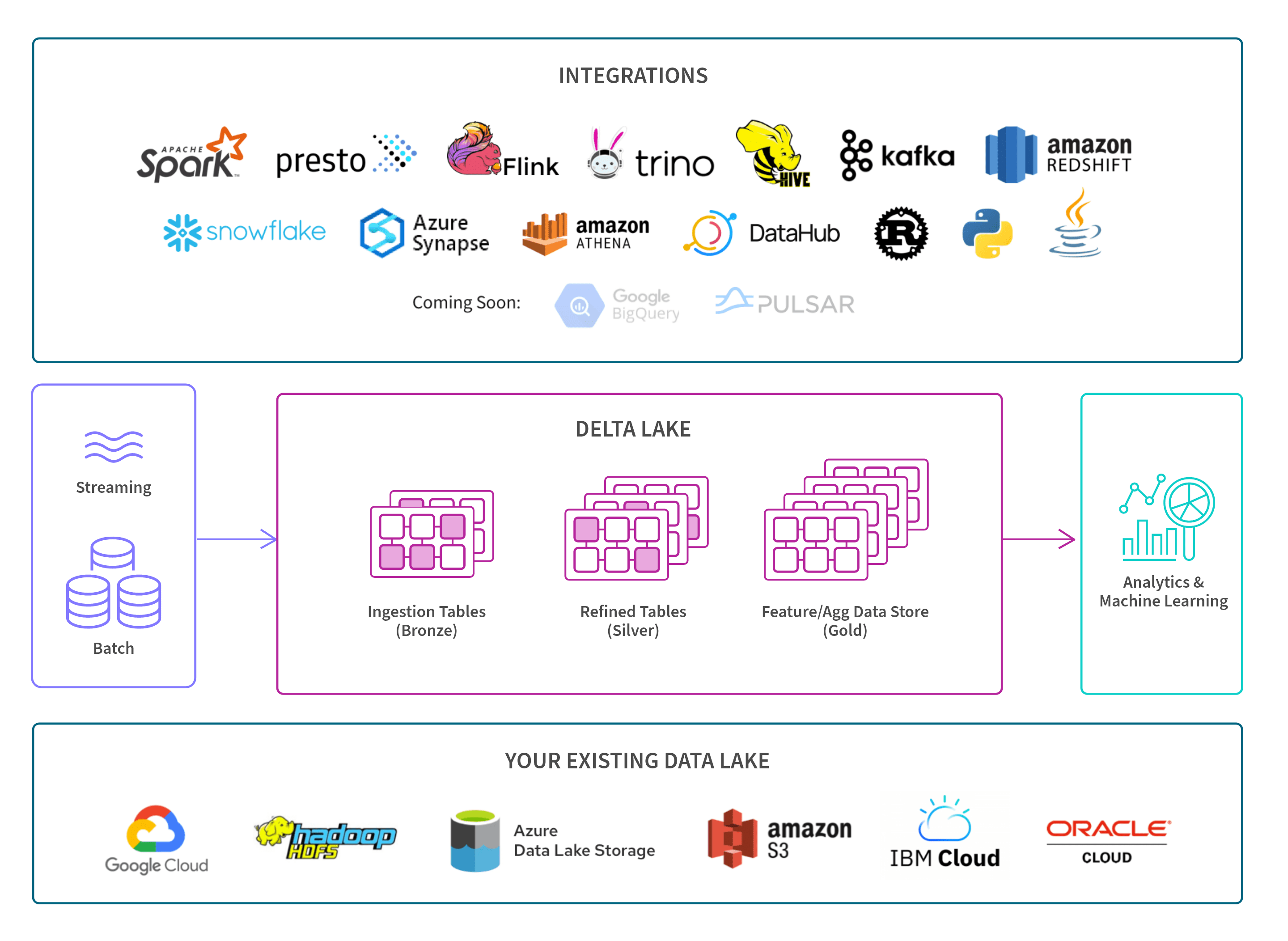

Delta Lake Benefits

Delta Lake offers your organization many benefits, such as the following:

Data Integrity and Reliability: Its support for ACID transactions (atomicity, consistency, isolation, durability) keeps data consistent during read and write operations, even with concurrent writes and failures.

Data Quality and Consistency: It maintains data quality and consistency by enforcing the table's schema on write, so data that does not match the schema is rejected instead of being written.
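To make schema enforcement on write concrete, here is a minimal sketch in plain Python. It is a simplified toy model, not Delta Lake's implementation: the function names `schema_violations` and `enforce_schema` are hypothetical, schemas are reduced to dictionaries of column name to type name, and the rules shown (no unexpected columns, no type mismatches, no names that differ only by case, missing columns allowed) are an assumption based on commonly described Delta Lake behavior. The real rules are more nuanced.

```python
from typing import Dict, List


def schema_violations(table_schema: Dict[str, str],
                      incoming_schema: Dict[str, str]) -> List[str]:
    """List the reasons a write would be rejected (empty list = compatible).

    Toy model only: schemas map column name -> type name.
    """
    violations = []
    # Map lower-cased table column names back to their original spelling.
    table_lower = {name.lower(): name for name in table_schema}
    for name, dtype in incoming_schema.items():
        if name in table_schema:
            if table_schema[name] != dtype:
                violations.append(
                    f"type mismatch for '{name}': "
                    f"table has {table_schema[name]}, write has {dtype}"
                )
        elif name.lower() in table_lower:
            violations.append(
                f"column '{name}' differs only by case "
                f"from '{table_lower[name.lower()]}'"
            )
        else:
            violations.append(f"unexpected column '{name}'")
    # Columns missing from the incoming data are allowed in this model.
    return violations


def enforce_schema(table_schema: Dict[str, str],
                   incoming_schema: Dict[str, str]) -> None:
    """Raise ValueError if the incoming schema is incompatible."""
    violations = schema_violations(table_schema, incoming_schema)
    if violations:
        raise ValueError("write rejected: " + "; ".join(violations))
```

For example, with a table schema of `{"id": "long", "name": "string"}`, a write carrying an extra `email` column would be rejected in this model, while a write that carries only `id` would pass.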

Auditability and Reproducibility: It supports version control and time travel, so you can query data as of a specific version or timestamp. This facilitates auditing, rollbacks, and reproducibility.
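As an illustration of time travel, the sketch below reads a Delta table as of a version or a timestamp from PySpark. The helper names `time_travel_options` and `read_as_of` are hypothetical, and the code assumes you already have a `SparkSession` configured with Delta Lake and a table stored at `path`. The `versionAsOf` and `timestampAsOf` reader options are the ones Delta Lake documents for this purpose.

```python
from typing import Dict, Optional


def time_travel_options(version: Optional[int] = None,
                        timestamp: Optional[str] = None) -> Dict[str, str]:
    """Build the reader options for a time-travel read.

    Exactly one of `version` or `timestamp` must be given.
    """
    if (version is None) == (timestamp is None):
        raise ValueError("pass exactly one of version or timestamp")
    if version is not None:
        return {"versionAsOf": str(version)}
    return {"timestampAsOf": timestamp}


def read_as_of(spark, path: str,
               version: Optional[int] = None,
               timestamp: Optional[str] = None):
    """Return a DataFrame with the table's contents at a past point.

    `spark` is assumed to be a SparkSession with Delta Lake configured.
    """
    options = time_travel_options(version, timestamp)
    return spark.read.format("delta").options(**options).load(path)
```

A call such as `read_as_of(spark, "/data/events", version=3)` (the path is a placeholder) would load the table as it existed at version 3, which is useful for auditing a past state or reproducing an earlier analysis.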

Operational Efficiency: It integrates batch and streaming data processing in a single platform, supported by its compatibility with Spark Structured Streaming.

Performance and Scalability: It manages metadata for large-scale data lakes and optimizes operations such as reading, writing, updating, and merging data through techniques like compaction, caching, indexing, and partitioning. It also relies on Spark and other query engines to process big data efficiently at scale.

Flexibility and Compatibility: It preserves the flexibility and openness of data lakes, allowing users to store and analyze any type of data, from structured to unstructured, using the tools and frameworks of their choice.

Secure Data Sharing: Delta Sharing is an open protocol for secure data sharing across organizations.

Security and Compliance: It supports the security and compliance of data lake solutions with features such as encryption, authentication, authorization, auditing, and data governance. These features also help organizations meet regulations such as GDPR and CCPA.

Open Source Adoption: It is backed by an active open source community of contributors and adopters.