Earlier today, Qlik announced its expanded role as a Snowflake Cortex launch partner, and this article explains how the two solutions work together to benefit our customers. Let’s begin by quickly reviewing Snowflake Cortex.

What is Snowflake Cortex?

Snowflake Cortex is an all-in-one service that helps you apply Generative AI (Gen AI) and Machine Learning (ML) to the data stored in Snowflake. The Gen AI capabilities are well suited to tasks like summarizing documents, translating languages, or generating text answers to questions. The ML functions, offered as SQL functions, are designed to automatically detect patterns and generate predictions that provide deeper insights. However, as a recent McKinsey blog post put it, "If your data isn't ready for generative AI, your business isn't ready for generative AI." Therefore, to get AI right with Snowflake Cortex, we must first ensure that our input data is accurate, timely, and of high quality. The remainder of this post focuses on getting data right for the Gen AI use case.

Unstructured, Structured, or Both?

Providing good-quality, timely data from both unstructured and structured sources to Snowflake Cortex is essential for increasing data diversity and reducing bias in your retrieval-augmented generation (RAG) applications. There are several reasons to consider building your own corpus in Snowflake to supplement the pre-trained LLM:

Domain Specificity: Your RAG application can generate answers grounded in your own company's domain.

Control Over Content: Building your own corpus allows you to ensure the information aligns with your application's goals and avoids perpetuating biases that might be present in external sources.

Integration with Existing Data: You likely already have valuable data sources relevant to your RAG application. Building a corpus that incorporates this data alongside curated external information lets you leverage your unique data assets and create a more comprehensive knowledge base for your RAG model.

Privacy Considerations: If your RAG application deals with sensitive data, relying on uploading that data to a public provider might raise privacy concerns. Building your own corpus within a Snowflake VPC allows you to control data access and ensure compliance with relevant privacy regulations.

Evolving Knowledge Base: Some domains evolve rapidly. Frequently updating your own corpus makes it easier to incorporate new information, ensuring your RAG model stays current.
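To make the idea of supplementing a pre-trained LLM with your own corpus more concrete, here is a minimal, hypothetical Python sketch of the retrieval step in RAG. It assumes a tiny in-memory corpus and uses simple bag-of-words cosine similarity in place of vector embeddings; the corpus contents and the `retrieve` and `build_prompt` names are illustrative assumptions, not part of Snowflake Cortex or Qlik Talend. Sending the resulting prompt to an LLM is left out.

```python
import math
import re
from collections import Counter

# Hypothetical in-house corpus (illustrative only); in practice these
# documents would be loaded into and queried from Snowflake.
CORPUS = {
    "returns-policy": "Customers may return unused items within 30 days for a full refund.",
    "shipping-sla": "Standard orders ship within two business days.",
    "warranty": "Hardware carries a one-year limited warranty covering manufacturing defects.",
}


def tokenize(text: str) -> Counter:
    """Lowercase the text and count alphanumeric word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, corpus: dict[str, str], k: int = 1) -> list[str]:
    """Return the ids of the k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(corpus, key=lambda doc_id: cosine(q, tokenize(corpus[doc_id])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, corpus: dict[str, str], k: int = 1) -> str:
    """Prepend retrieved company context so the LLM answers from your own data."""
    context = "\n".join(f"[{d}] {corpus[d]}" for d in retrieve(question, corpus, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("How many days do customers have to return an item?", CORPUS))
```

Production RAG systems typically replace the word-count similarity with embedding vectors and a vector search, but the flow is the same: retrieve the most relevant company documents, then ground the prompt in them.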

However, acquiring, preparing, and aggregating the source data can require significant manual coding effort, which is why data professionals look to Qlik for help.

Beyond Data Ingest: Automated Data Transformation Pipelines

Qlik Talend’s Data Integration and Quality solution efficiently delivers trusted data to warehouses, lakes, or other enterprise data platforms. While our enterprise data fabric platform offers many features that improve data engineer productivity, our AI-augmented, no-code pipelines are specifically optimized for working with Snowflake.

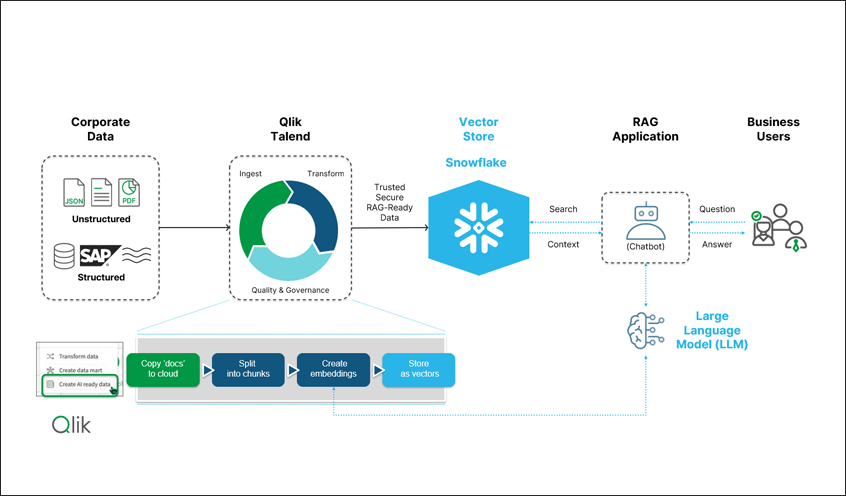

Let’s look at a specific example. The diagram above represents a high-level overview of a data pipeline that continually delivers data to Snowflake in near real-time. Qlik Talend builds a pipeline that collects unstructured and structured data from across the enterprise. The no-code pipeline can be flexibly configured to suit particular requirements. For example, you might only want to ingest documents from certain authors or time periods. You might also want to ingest order information from SAP and other cloud or enterprise applications in near real-time. The source data can reside on-premises or in the cloud.
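The kind of selection described above (only certain authors or time periods, delivered continually) can be illustrated as a filter that also skips anything unchanged since the previous run. This is a minimal Python sketch under stated assumptions: the `Document` record, `select_for_ingest`, and the timestamp-watermark approach are hypothetical and do not reflect how Qlik Talend pipelines are actually configured, which the article describes as no-code.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Document:
    # Hypothetical source record; field names are illustrative only.
    doc_id: str
    author: str
    modified: datetime


def select_for_ingest(docs, authors, start, end, last_sync):
    """Keep documents by allowed authors, modified within [start, end),
    and changed after the last_sync watermark."""
    return [
        d for d in docs
        if d.author in authors
        and start <= d.modified < end
        and d.modified > last_sync
    ]


if __name__ == "__main__":
    utc = timezone.utc
    docs = [
        Document("a", "alice", datetime(2024, 3, 1, tzinfo=utc)),
        Document("b", "bob", datetime(2024, 3, 2, tzinfo=utc)),
        Document("c", "alice", datetime(2023, 12, 31, tzinfo=utc)),
        Document("d", "alice", datetime(2024, 5, 10, tzinfo=utc)),
    ]
    window = (datetime(2024, 1, 1, tzinfo=utc), datetime(2025, 1, 1, tzinfo=utc))
    # Initial full load: use an early watermark so every matching document is selected.
    first = select_for_ingest(docs, {"alice"}, *window, datetime(1970, 1, 1, tzinfo=utc))
    # Incremental run: only documents changed after the previous sync are selected.
    later = select_for_ingest(docs, {"alice"}, *window, datetime(2024, 4, 1, tzinfo=utc))
    print([d.doc_id for d in first], [d.doc_id for d in later])
```

Passing an early `last_sync` performs an initial full load; later runs pass the timestamp of the previous run, so only new or changed documents flow downstream.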

For proof, watch the short demonstration below:

Limitless Possibilities with Data

Generative AI has the potential to be a game-changer for many organizations and to redefine how business is done. With a strong, trusted data foundation powered by Qlik Talend and LLM features powered by Snowflake, you can create new applications that go beyond simple automation, generate new product ideas, and analyze customer data in remarkable detail. Repetitive tasks like content creation and code generation can be automated, allowing your company to focus on core competencies and invest in areas that drive growth. This democratization of innovation also levels the playing field, allowing smaller companies to compete with larger ones by harnessing data and AI. Consequently, businesses that embrace this technology and adapt their strategies will be well positioned to thrive.

Conclusion

Qlik is proud to be a preferred launch partner for Snowflake Cortex, and our optimized integration with Snowflake now enables customers to harness the full potential of their data for AI-driven analytics. Qlik’s innovative approach allows enterprises to embed deep learning into their data workflows, enhancing decision-making and operational efficiency with capabilities such as real-time predictive analytics and intelligent data insights.

If you’re at Snowflake Summit this week, stop by booth #1301 to experience Qlik’s optimized Snowflake integration. If you couldn’t make it to San Francisco, you can still discover how the enhanced capabilities of Qlik can transform your data management and analytics strategies within the Snowflake environment. For more detailed information, visit our dedicated Snowflake page.
