Making operational decisions in a tight timeframe is critical to the success of an organization. Real-time data ingestion enables quicker data availability, in turn enabling timely decision-making.

Real-time ingestion is foundational to our digital transformation at Discover Financial Services. As a senior manager leading the streaming and real-time data platforms at Discover, I don't want my team in the business of replicating data by hand. My goal is to free the data completely by automating as much as possible, so that our internal customers across Discover's divisions have sufficient, timely data to make rapid decisions that benefit the business.

Regaining an Advantage with Cloud Data Fabric Architecture

Discover is a leading digital bank and payment services company with one of the most recognized brands in the US financial services sector. Since its inception in 1985, the company has become one of the largest card issuers in the country. We’re probably most well-known for the Discover card, and we also offer checking and savings accounts, personal, student, and home loans, and certificates of deposit through our banking business. Our mission is to help people spend smarter, manage debt better, and save more money so they can achieve a brighter financial future.


To achieve this mission, Discover's teams need up-to-date data to make timely decisions. Unfortunately, using data from our legacy on-premises data warehouse required file-based ingestion, which limited data sufficiency, timely data availability, and the scope of information modeling. Creating ingestion tasks by hand was laborious, time-consuming, and error-prone, and the tasks were difficult to standardize. Because we could not make data available quickly, our ability to make timely decisions suffered. Having so much data was an advantage, but a batch-based approach left much of that advantage on the table.

As businesses rely on more data sources than ever before, having a centralized approach to data storage and management has never been more important. Changing end-user expectations around data sufficiency and data availability made it imperative that we modernize and centralize Discover’s data assets in the cloud.


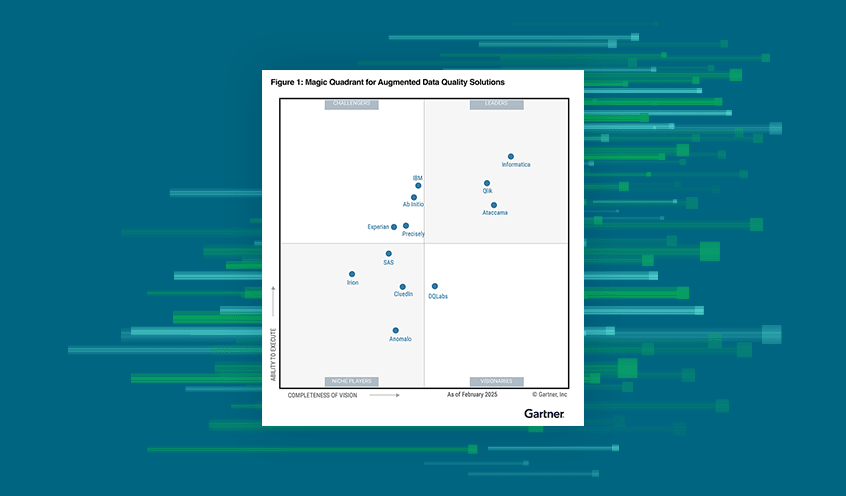

As part of this data ecosystem modernization, we wanted to adopt a cloud data fabric architecture and embrace real-time streaming data ingestion from a wide range of source databases. Qlik stood out as the one platform that supported so many cloud and on-premises sources and target endpoints. Another reason we chose Qlik was that it would let us build custom add-ons for data tokenization and credentials management, both of which were key to our plans for the new data ecosystem.

A Modernized Architecture Enables Data Streaming

Discover uses Qlik Replicate, Qlik Enterprise Manager, and Qlik Sense, all of which we incorporated into our cloud data fabric. We developed the project in four stages:

1. Replicate the source data into Snowflake and make it available.
2. Capture the data in the desired format.
3. Build the domain-integrated layer, increasing consistency by standardizing business dimensions.
4. Develop the consumer-optimized layer, building products for end users to consume the data. These products include Customer 360, Marketing 360, and many more.
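To make the layering concrete, here is a minimal in-memory sketch of how stages 3 and 4 build on replicated data. It is an illustration under stated assumptions, not Discover's implementation: the source names, the `state` dimension, the `STATE_CODES` mapping, and the Customer 360-style output are all hypothetical, and a plain Python list stands in for the rows that stages 1 and 2 would land in Snowflake.

```python
from collections import defaultdict

# Hypothetical rows standing in for data already replicated and captured
# (stages 1 and 2). Each source spells the same dimension differently.
RAW_ROWS = [
    {"source": "oracle_cards", "customer_id": 1, "state": "Illinois", "balance": 120.0},
    {"source": "db2_loans", "customer_id": 1, "state": "IL", "balance": 5000.0},
    {"source": "mssql_banking", "customer_id": 2, "state": "il", "balance": 300.0},
]

# Hypothetical conformed dimension used by the domain-integrated layer.
STATE_CODES = {"illinois": "IL", "il": "IL"}


def domain_integrate(rows):
    """Stage 3: standardize the 'state' dimension so all sources agree."""
    out = []
    for row in rows:
        conformed = dict(row)
        key = row["state"].lower()
        conformed["state"] = STATE_CODES.get(key, row["state"].upper())
        out.append(conformed)
    return out


def consumer_optimize(rows):
    """Stage 4: build a simple Customer 360-style view, one entry per customer."""
    view = defaultdict(lambda: {"states": set(), "total_balance": 0.0, "sources": set()})
    for row in rows:
        entry = view[row["customer_id"]]
        entry["states"].add(row["state"])
        entry["total_balance"] += row["balance"]
        entry["sources"].add(row["source"])
    return dict(view)


customer_360 = consumer_optimize(domain_integrate(RAW_ROWS))
print(customer_360[1]["total_balance"])  # 5120.0
```

The design point the sketch tries to capture is ordering: consumer-facing products aggregate only after dimensions are conformed, so every product sees the same "IL" rather than three spellings of it.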

Qlik tools accelerated data ingestion and streaming across our data sources and target endpoints throughout this development process, and they are now a key gateway in our modern data-engineering platform. Qlik Replicate enables 24/7 data ingestion into the cloud data fabric from more than six critical source databases, both on-premises and in the cloud, as well as from SaaS sources. These sources include mobile and digital assets, events sourced through Kafka, and relational and columnar databases such as Oracle, DB2, MS SQL Server, MariaDB, Teradata, and Postgres.

Part of our rationale for adopting a cloud data fabric architecture was to move away from legacy batch-based ingestion toward near real-time data streaming. We focused on extreme automation and ingestion at scale through a metadata-driven approach built on APIs and microservices. In effect, we use Qlik Replicate and Qlik Enterprise Manager almost as OEM components, encapsulating the Qlik APIs inside our own process.
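A metadata-driven approach means each pipeline is generated from a catalog entry rather than configured by hand. The sketch below shows that idea under explicit assumptions: the `TableMetadata` fields, the `build_task_spec` function, and the dictionary layout it returns are hypothetical and are not the Qlik Replicate or Qlik Enterprise Manager task schema. A real microservice would translate such a spec into the vendor's documented API calls, which are deliberately not shown here.

```python
from dataclasses import dataclass, field


@dataclass
class TableMetadata:
    """Hypothetical catalog entry describing one source table to ingest."""
    source_endpoint: str
    schema: str
    table: str
    target_endpoint: str
    tokenized_columns: list[str] = field(default_factory=list)


def build_task_spec(meta: TableMetadata) -> dict:
    """Turn catalog metadata into a replication-task description.

    The layout is invented for illustration only; it is NOT the
    Qlik Replicate / Qlik Enterprise Manager task definition format.
    """
    return {
        "name": f"{meta.source_endpoint}-{meta.schema}-{meta.table}".lower(),
        "source": meta.source_endpoint,
        "target": meta.target_endpoint,
        "tables": [{"schema": meta.schema, "table": meta.table}],
        "mode": "full_load_and_cdc",  # initial load, then change data capture
        "transformations": [
            {"column": col, "action": "tokenize"} for col in meta.tokenized_columns
        ],
    }


def build_all(catalog: list[TableMetadata]) -> list[dict]:
    """Generate one task spec per catalog entry; a service would then submit
    each spec through the replication tool's API."""
    return [build_task_spec(meta) for meta in catalog]


catalog = [TableMetadata("ORACLE_CARDS", "REWARDS", "TXN", "SNOWFLAKE_PROD", ["CARD_NUMBER"])]
specs = build_all(catalog)
print(specs[0]["name"])  # oracle_cards-rewards-txn
```

Because the spec is derived entirely from metadata, onboarding another table becomes a new catalog row rather than a new hand-built task, which is what makes ingestion at scale repeatable.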

We then built a front end with a simplified UI to help our data engineers curate data sets quickly. Data engineers can now choose which sources to ingest, check data sensitivity, and mark specific fields for tokenization. With those details selected, the engineer deploys the automated Qlik Replicate pipeline, an approach that has let us onboard thousands of tables quickly.
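The article does not describe how Discover's tokenization add-on works, so the following is only a sketch of the general idea behind "mark specific fields for tokenization", using a swapped-in technique: deterministic keyed hashing with HMAC-SHA256 from the Python standard library. The key, the `tok_` prefix, and the function names are hypothetical. Production tokenization often relies on a token vault or format-preserving encryption instead, because those allow authorized detokenization.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; a real deployment would fetch it
# from a secrets manager rather than hard-coding it.
TOKEN_KEY = b"example-key-do-not-use"


def tokenize(value: str, key: bytes = TOKEN_KEY) -> str:
    """Replace a sensitive value with a deterministic HMAC-SHA256 token.

    The same input always yields the same token, so tokenized columns can
    still be joined, but the original value cannot be recovered from it.
    """
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:32]


def apply_tokenization(row: dict, marked_fields: set) -> dict:
    """Return a copy of the row with every engineer-marked field tokenized."""
    return {
        col: tokenize(str(val)) if col in marked_fields and val is not None else val
        for col, val in row.items()
    }


row = {"card_number": "6011000990139424", "merchant": "Grocer", "amount": 42.5}
safe = apply_tokenization(row, {"card_number"})
print(safe["merchant"], safe["card_number"].startswith("tok_"))  # Grocer True
```

Determinism is the design choice that matters here: downstream layers can still join on a tokenized card number across tables without ever seeing the raw value.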

Our data storage targets are Snowflake, Kafka, and AWS S3, and we run Qlik Replicate on more than 18 production servers.

Qlik Drives Efficiency Across the Business

Centralizing and modernizing our data ecosystem in the cloud has allowed us to deliver high-precision, near real-time analytics to our internal customers. By providing a single source of truth for data across the entire organization, we ensure these analytics are not only up to date, but also uniformly accurate—so analytics that one division pulls don’t contradict the analytics that another division wants to use. Our new data architecture has streamlined workflows and improved operational efficiencies for both data engineers and end users. And from my team’s perspective, centralizing the data helps us more effectively access information at various stages in the lifecycle, making it easier to govern and manage.

Our efforts to develop microservices around the Qlik Enterprise Manager (QEM) APIs and a data engineer-friendly UI have paid off. The Discover data engineering team has adopted Qlik, and its engineers need no code to set up automated data pipelines. This capability translates to "lights-out" automated ingestion. We have said goodbye to manually ingesting nightly batches; instead, Discover teams have the timely information they need to take action.


Once we established streaming data ingestion, our business started to rapidly prototype, test, and refine new ideas for various Discover lines and divisions, including cards, rewards, direct banking, and loan applications. Our use cases vary in volume, velocity, and complexity. Our Discover Rewards Program, for example, is one of our key application pipelines, with billions of records propagated from Oracle to the target Snowflake data warehouse.

The speed at which data becomes available to end users truly matters in financial services, especially given the value of second-by-second decision-making to our products. For example, we can detect fraud by running card transaction approvals through this data environment and notify cardholders immediately. Another example is our call centers, where several streams process credit applications for cards, mortgages, and personal and student loans.

Taking This Collaboration Further

We've built a two-way collaboration between Discover and Qlik. We use various Qlik services, including Professional Services, customer success relationships, and Platform Production Services. We also collaborate regularly with Qlik's product engineering teams on co-innovation opportunities. Because we work so closely with Qlik, our feature requests are consistently delivered.

We intend to strengthen this collaboration further as the cloud data fabric's footprint grows across the enterprise. Part of that growth will come from adding more sources and target endpoints across our multi-cloud environment. We already use AI in our business, and I plan to extend that use by embedding Qlik's ML models into fault detection, load balancing, and latency reduction.

Qlik’s Data Integration platform is a critical pillar of our cloud data fabric, and Qlik Replicate is a key component that feeds necessary information for the data science and machine learning platform teams.